Details

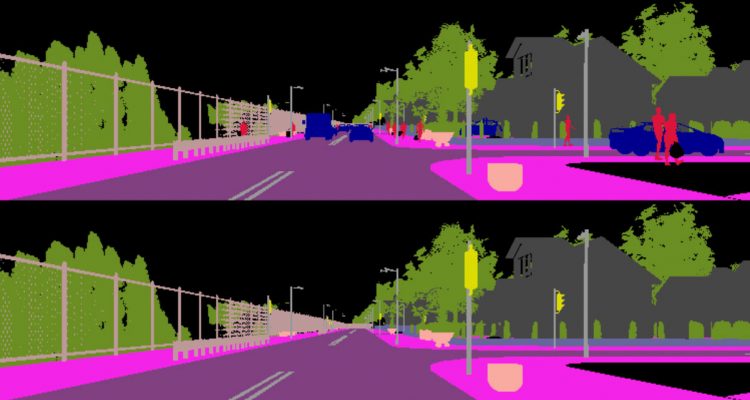

We collected a synthetic dataset using CARLA, a driving simulator built on the Unreal Engine game engine. We ran several simulations with CARLA, collecting both rendered RGB frames and the corresponding synthetically generated semantic segmentations.

To acquire each scene both with and without dynamic objects, we first run the simulation with vehicles and pedestrians inserted, driving with the autopilot and storing every driving command it generates. These commands are then replayed in a second, otherwise identical simulation without dynamic objects.
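The record-and-replay idea can be illustrated with a minimal sketch. It does not use the CARLA API: `Command`, `step`, and `toy_autopilot` are hypothetical stand-ins for the simulator's control message, vehicle dynamics, and autopilot. The only point it makes is that, with deterministic dynamics, replaying the logged commands from the same start pose reproduces the same trajectory.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Command:
    """One driving command; the fields are illustrative, not CARLA's."""
    steer: float     # heading change per step (toy units)
    throttle: float  # distance advanced per step (toy units)


def step(pose, cmd):
    """Toy deterministic ego-vehicle update: (x, y, heading) -> next pose."""
    x, y, heading = pose
    heading += cmd.steer
    return (x + cmd.throttle * math.cos(heading),
            y + cmd.throttle * math.sin(heading),
            heading)


def toy_autopilot(frame):
    """Hypothetical stand-in for the simulator's autopilot."""
    return Command(steer=0.05 * math.sin(frame / 10.0), throttle=1.0)


def record_run(start_pose, num_frames):
    """First pass (with dynamic objects): drive on autopilot, log commands."""
    pose, commands, poses = start_pose, [], []
    for frame in range(num_frames):
        cmd = toy_autopilot(frame)
        commands.append(cmd)
        pose = step(pose, cmd)
        poses.append(pose)
    return commands, poses


def replay_run(start_pose, commands):
    """Second pass (no dynamic objects): feed back the logged commands."""
    pose, poses = start_pose, []
    for cmd in commands:
        pose = step(pose, cmd)
        poses.append(pose)
    return poses


start = (0.0, 0.0, 0.0)
commands, populated_poses = record_run(start, num_frames=1000)
empty_poses = replay_run(start, commands)
# Same start pose, same commands, deterministic dynamics: same trajectory.
print(populated_poses == empty_poses)
```

In a real simulator the two runs can still diverge, for example when the ego vehicle collides with another agent in the populated run, which is why a later alignment check is needed.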

A separate simulation is run for 1000 frames from each spawning point of both maps available in CARLA.

Simulations are run at 3 FPS and subsampled to take an image every 3 seconds to ensure diversity. Occasional misalignments between the two versions of the data (e.g., due to car accidents) are removed in a post-processing step. In total, we collected 11,913 frames.
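As a rough illustration of the numbers above: at 3 FPS, one image every 3 seconds corresponds to keeping every 9th simulated frame, so a 1000-frame run yields at most 112 images before filtering. The sketch below assumes sampling starts at frame 0 and that misaligned frames are detected by comparing ego-vehicle positions between the two runs; the function names and the `max_dist` threshold are hypothetical, since the actual post-processing criterion is not described here.

```python
import math

FPS = 3                          # simulation rate stated in the text
SAMPLE_PERIOD_S = 3              # one image kept every 3 seconds
STRIDE = FPS * SAMPLE_PERIOD_S   # 9 simulated frames between kept images


def kept_indices(num_frames, stride=STRIDE):
    """Frame indices that survive subsampling (assumes we start at frame 0)."""
    return list(range(0, num_frames, stride))


def aligned_indices(poses_a, poses_b, indices, max_dist=0.5):
    """Keep frames whose ego (x, y) positions agree in both runs.

    max_dist is a hypothetical tolerance, not a value from the dataset.
    """
    keep = []
    for i in indices:
        (xa, ya), (xb, yb) = poses_a[i], poses_b[i]
        if math.hypot(xa - xb, ya - yb) <= max_dist:
            keep.append(i)
    return keep


# Toy example: the second run gets stuck at frame 900 (e.g. after a collision).
poses_with = [(float(i), 0.0) for i in range(1000)]
poses_without = [(float(min(i, 900)), 0.0) for i in range(1000)]

sampled = kept_indices(1000)
aligned = aligned_indices(poses_with, poses_without, sampled)
print(len(sampled), len(aligned))  # 112 101
```

Frames after the divergence point are dropped from both versions of the data, so the RGB and segmentation pairs that remain depict the same viewpoint with and without dynamic objects.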

The dynamic objects included in the current version of CARLA (v0.8.2) are cars and pedestrians. We plan to collect additional data when new object classes become available in CARLA.

The full dataset can be downloaded at the following links:

- RGB data (17.5 GB)

- Semantic segmentations (274 MB)

Publications: if you use this dataset, please cite our paper:

@incollection{berlincioni2019road,
  title={Road layout understanding by generative adversarial inpainting},
  author={Berlincioni, Lorenzo and Becattini, Federico and Galteri, Leonardo and Seidenari, Lorenzo and Del Bimbo, Alberto},
  booktitle={Inpainting and Denoising Challenges},
  pages={111--128},
  year={2019},
  publisher={Springer}
}