Insights

The project aims to develop computer vision solutions, based on supervised machine learning, that support behavior analysis and the prediction of actions and scene evolution for autonomous driving.

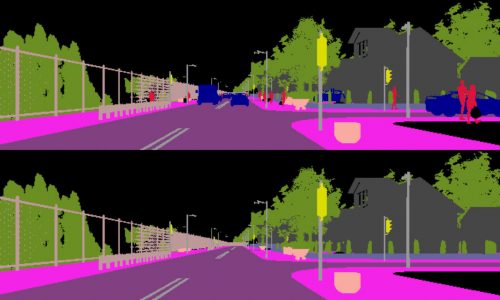

Understanding and predicting behaviors is one of the hardest tasks in computer vision. Advances driven by powerful GPU-based computing and by progress in deep network technology have produced encouraging results, which make this problem technically addressable with reasonable expectations of effective real-world implementations. In particular, Long Short-Term Memory (LSTM) networks are a special kind of recurrent network that can learn long-term dependencies, using earlier events in a temporal sequence to inform the interpretation of later ones. In [1] we proposed a network model named ProgressNet, which uses LSTM networks trained on sequences of convolutional neural network features to predict, given the context, how far a human action has progressed.

Building on our recent research findings and our experience with ProgressNet, we propose to model the complex dynamics of the scene by learning representations of both the context and each visible object instance. While human actions are sequences of body poses and interactions with the environment, in an automotive scenario the behavior of other drivers has to be inferred from the variations in speed and orientation of their vehicles, which can only be observed from the outside. The road layout, inferred by inpainting [2], will be exploited as an additional cue for trajectory prediction.
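Because other drivers' behavior has to be read from how their vehicles change speed and orientation, it helps to see what those cues look like numerically. The following is a minimal sketch, not the project's method: it assumes a vehicle has already been detected, tracked, and projected onto a ground plane, with positions sampled every `dt` seconds. The names `KinematicCue`, `kinematic_cues`, `wrap_angle`, and `min_disp` are hypothetical, introduced only for illustration. Speed, heading, acceleration, and yaw rate are estimated by finite differences between consecutive positions.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class KinematicCue:
    speed: float         # magnitude of displacement per second
    heading: float       # direction of motion in the ground plane (rad)
    acceleration: float  # change in speed per second
    yaw_rate: float      # wrapped change in heading per second (rad/s)


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def kinematic_cues(
    track: Sequence[Tuple[float, float]], dt: float, min_disp: float = 1e-6
) -> List[KinematicCue]:
    """Estimate per-step kinematic cues from ground-plane positions.

    Hypothetical helper: assumes `track` holds (x, y) positions of one
    tracked vehicle, sampled at a fixed interval `dt` (seconds).
    """
    if len(track) < 3:
        raise ValueError("need at least three positions")
    speeds: List[float] = []
    headings: List[float] = []
    for (x0, y0), (x1, y1) in zip(track, track[1:]):
        dx, dy = x1 - x0, y1 - y0
        dist = math.hypot(dx, dy)
        speeds.append(dist / dt)
        # Heading is undefined for a (near-)stationary vehicle,
        # so the previous heading is carried forward in that case.
        if dist > min_disp or not headings:
            headings.append(math.atan2(dy, dx))
        else:
            headings.append(headings[-1])
    cues: List[KinematicCue] = []
    for i in range(1, len(speeds)):
        cues.append(
            KinematicCue(
                speed=speeds[i],
                heading=headings[i],
                acceleration=(speeds[i] - speeds[i - 1]) / dt,
                # Wrapping avoids a spurious ~2*pi jump when the heading
                # crosses the +/-pi boundary.
                yaw_rate=wrap_angle(headings[i] - headings[i - 1]) / dt,
            )
        )
    return cues


if __name__ == "__main__":
    # A vehicle driving straight, then veering left.
    for cue in kinematic_cues([(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 1.0)], dt=0.1):
        print(cue)
```

Per-step vectors like these could be fed, as one input among others, to a recurrent model such as an LSTM alongside visual features. Raw finite differences are noisy on real tracks, so in practice some smoothing or filtering of the positions would likely be needed first.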