Details

Description: Neural networks with memory capabilities have been introduced to solve machine learning problems that require modeling sequential data such as time series. The most common models are Recurrent Neural Networks (RNN) and their variants, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU). More recently, alternative solutions based on Transformers or Memory Augmented Neural Networks (MANN) have been proposed to overcome the limitations of RNNs. Their distinguishing characteristics make them substantially different from the earlier models. Transformers directly compare the elements of the input sequence through self-attention mechanisms. MANNs use an external, element-wise addressable memory. Unlike in RNNs, state-to-state transitions in MANNs are obtained through read/write operations, and a set of independent states is maintained. An important property of Memory Augmented Networks is that the number of parameters is not tied to the size of the memory. MANNs have been defined with both episodic and permanent external memories. The course will discuss these new memory network models and their applications.
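As a rough illustration of the read/write mechanism described above, the following minimal NumPy sketch performs a content-based read and an additive write on an external memory. It is not taken from the course material: the function names (`read_memory`, `write_memory`), the cosine-similarity addressing, and the `sharpness` parameter are assumptions chosen for illustration. The same functions are applied unchanged to memories with 8 and 1024 slots, which reflects the point that the number of parameters does not depend on the memory size.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def read_memory(memory, key, sharpness=5.0):
    """Content-based read: weight each slot by its cosine similarity to the key.

    `sharpness` is a hypothetical scaling factor that makes the addressing
    more or less peaked; it is an illustrative assumption.
    """
    mem_norm = np.linalg.norm(memory, axis=1) + 1e-8
    key_norm = np.linalg.norm(key) + 1e-8
    similarity = (memory @ key) / (mem_norm * key_norm)
    weights = softmax(sharpness * similarity)
    # The read vector is a weighted sum of the memory slots.
    return weights @ memory, weights

def write_memory(memory, weights, value):
    """Additive write: add the value to each slot in proportion to its weight."""
    return memory + np.outer(weights, value)

rng = np.random.default_rng(0)
for num_slots in (8, 1024):
    memory = rng.normal(size=(num_slots, 16))
    key = memory[3]  # query with the content of slot 3
    read_vec, w = read_memory(memory, key)
    memory = write_memory(memory, w, rng.normal(size=16))
    print(num_slots, read_vec.shape, int(np.argmax(w)))
```

In a trained model the key and the written value would be produced by a controller network; here they are fixed by hand to keep the sketch self-contained.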

Institution: University of Florence

Department: Media Integration and Communication Center (MICC)

ECTS: 2

Level: PhD

Course schedule: Friday 30/4, 10.00 – 12.00 CET, Friday 7/5, 10.00 – 12.00 CET

Contents:

- Memory Networks

- Memory Network models: RNN; LSTM; GRU; LSTM applications and drawbacks

- Memory Augmented Networks

- Memory Augmented models: Neural Turing Machine; MANN; End-to-End Memory Networks; KV Memory Networks

- MANTRA

- Transformers

Language: English

Participation mode: Webex

Participation terms: Free of charge

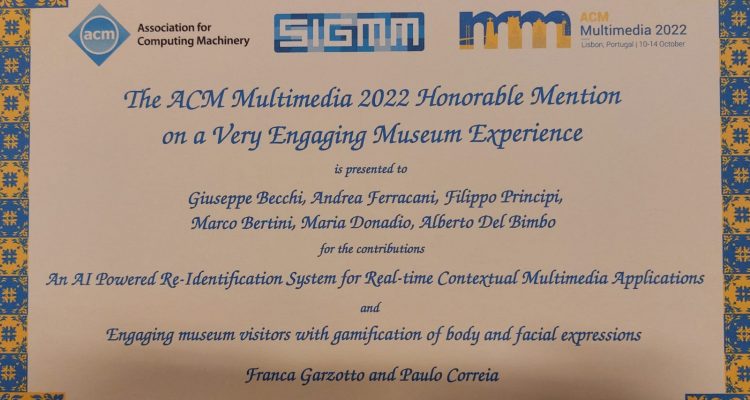

Lecturers: Prof. Alberto Del Bimbo, Federico Becattini