Details

Pedestrian detection is a core problem in computer vision that sees broad application in video surveillance and, more recently, in advanced driving assistance systems.

Despite this broad interest, it remains a challenging problem, in part because a detector must be robust across a vast range of conditions.

Pedestrian detection at nighttime and in adverse weather is particularly challenging, which is one reason why thermal and multispectral approaches have become popular in recent years.

In this paper, we propose a novel approach to domain adaptation that significantly improves pedestrian detection performance in the thermal domain.

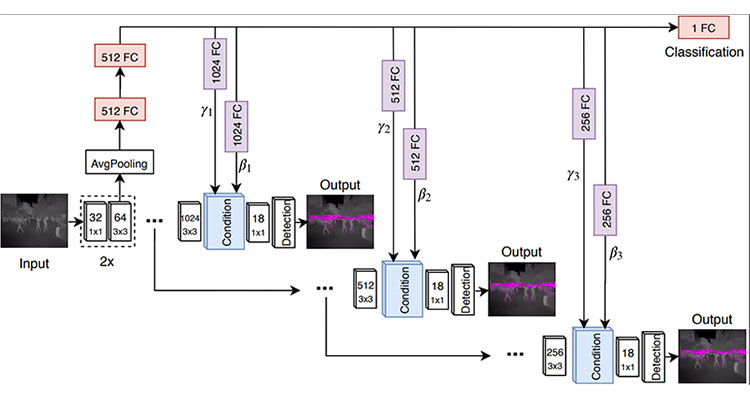

The key idea behind our technique is to adapt an RGB-trained detection network to simultaneously solve two related tasks. An auxiliary classification task that distinguishes between daytime and nighttime thermal images is added to the main detection task during domain adaptation.
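The two-task setup described above can be sketched as a joint training objective. This is a minimal illustration, not the exact loss used in this work: the weighted-sum form, the names `binary_cross_entropy` and `joint_loss`, and the `aux_weight` parameter are all assumptions made for the example, and `detection_loss` stands in for whatever the detector already computes.

```python
import math


def binary_cross_entropy(p_night: float, is_night: int, eps: float = 1e-7) -> float:
    """Cross-entropy for the auxiliary day (0) / night (1) classifier."""
    p = min(max(p_night, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(is_night * math.log(p) + (1 - is_night) * math.log(1.0 - p))


def joint_loss(detection_loss: float, p_night: float, is_night: int,
               aux_weight: float = 1.0) -> float:
    """Main detection loss plus a weighted auxiliary classification loss.

    `aux_weight` is a hypothetical hyperparameter balancing the two tasks.
    """
    return detection_loss + aux_weight * binary_cross_entropy(p_night, is_night)
```

With `aux_weight=0.0` the objective reduces to plain detection training, which makes the contribution of the auxiliary task easy to isolate in an ablation.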

The internal representation learned to perform this classification task is used to condition a YOLOv3 detector at multiple points in order to improve its adaptation to the thermal domain.
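One common way to condition a network on an auxiliary representation is channel-wise feature modulation (scale and shift), sketched below in plain Python. Treating the conditioning as this kind of modulation is an assumption made for illustration; the text above does not specify the mechanism. The helper names `linear` and `condition_feature_map` and the `gamma_layer` and `beta_layer` parameters are likewise hypothetical.

```python
def linear(weights, bias, x):
    """Dense layer: one output value per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def condition_feature_map(feature_map, embedding, gamma_layer, beta_layer):
    """Scale and shift each channel of a detector feature map.

    feature_map: list of channels, each a 2D list (H x W).
    embedding:   1D list taken from the day/night classifier's hidden layer.
    gamma_layer, beta_layer: (weights, bias) pairs that map the embedding
        to one scale and one shift per channel (assumed, not from the source).
    """
    gammas = linear(*gamma_layer, embedding)
    betas = linear(*beta_layer, embedding)
    return [
        [[g * v + b for v in row] for row in channel]
        for channel, g, b in zip(feature_map, gammas, betas)
    ]


# Toy usage: 2 channels of size 1x2, a 2-dimensional embedding.
features = [[[1.0, 2.0]], [[3.0, 4.0]]]
embedding = [1.0, -1.0]
gamma_layer = ([[0.0, 0.0], [1.0, 0.0]], [1.0, 1.0])  # scales: [1.0, 2.0]
beta_layer = ([[0.0, 0.0], [0.0, 1.0]], [0.0, 0.0])   # shifts: [0.0, -1.0]
conditioned = condition_feature_map(features, embedding, gamma_layer, beta_layer)
```

Conditioning "at multiple points" would then amount to calling such a function at several detector stages, each with its own pair of projection layers.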

We validate the effectiveness of task-conditioned domain adaptation by comparing it with state-of-the-art methods on the KAIST Multispectral Pedestrian Detection Benchmark.

To the best of our knowledge, our proposed task-conditioned approach achieves the best single-modality detection results.