Details

Video-based communication between users has risen sharply in the last year, unfortunately fueled by the spread of COVID-19.

Efficient, low-latency video transmission is a challenging problem, made harder by fragmented network infrastructure that does not always allow high throughput.

Lossy video compression is a basic requirement for deploying such technology widely. While it may compromise the quality of the streamed video, recent deep-learning-based solutions can restore the quality of lossy compressed video.

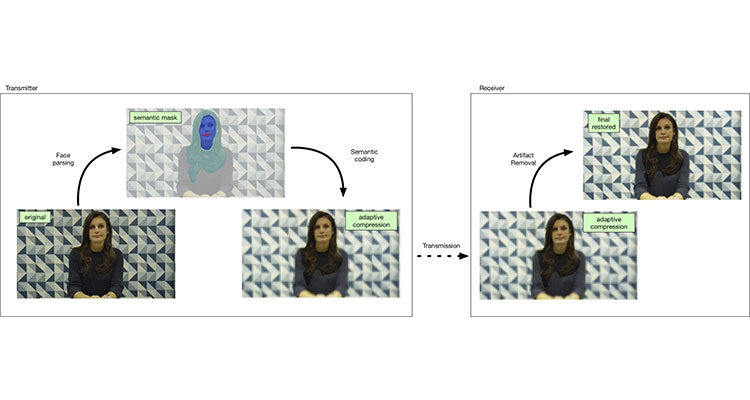

Given the nature of video conferencing, bitrate allocation in video streaming could be driven semantically, differentiating quality between the talking subjects and the background. To date, no work has studied the restoration of semantically coded video using deep learning.

In this work we show how such videos can be efficiently generated by shifting bitrate with masks derived via computer vision, and how a deep generative adversarial network can be trained to restore video quality. Our study shows that combining semantic coding with learning-based video restoration can provide superior results.
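To make the idea of mask-driven bitrate shifting concrete, the following is a minimal sketch, not the method used in this work. It assumes that a binary foreground mask (for example from a person segmentation model) is already available, and that the encoder accepts a per-block quantization parameter (QP) offset, where a negative offset means finer quantization and therefore more bits. The function name `qp_offset_map`, the block size, the offset values, and the foreground threshold are all hypothetical choices for illustration.

```python
import numpy as np


def qp_offset_map(mask, block=16, fg_offset=-6, bg_offset=6, min_fg_fraction=0.1):
    """Turn a per-pixel foreground mask into per-block QP offsets.

    All parameters are illustrative assumptions:
    block            -- side length of the coding block in pixels
    fg_offset        -- QP offset for foreground blocks (negative = higher quality)
    bg_offset        -- QP offset for background blocks (positive = lower quality)
    min_fg_fraction  -- share of foreground pixels needed to treat a block as foreground
    """
    mask = np.asarray(mask, dtype=bool)
    h, w = mask.shape

    # Pad with background so both dimensions are multiples of the block size.
    # Padded pixels count as background, which dilutes the foreground share
    # of blocks on the bottom and right edges.
    pad_h = (-h) % block
    pad_w = (-w) % block
    padded = np.pad(mask, ((0, pad_h), (0, pad_w)), mode="constant", constant_values=False)

    rows = padded.shape[0] // block
    cols = padded.shape[1] // block

    # Split the frame into (rows x cols) blocks and measure the foreground share of each.
    blocks = padded.reshape(rows, block, cols, block)
    fg_fraction = blocks.mean(axis=(1, 3))

    # Blocks with enough foreground get the quality boost; the rest give up bits.
    return np.where(fg_fraction >= min_fg_fraction, fg_offset, bg_offset).astype(np.int8)


if __name__ == "__main__":
    # Toy 32x48 frame with a single 16x16 foreground region.
    frame_mask = np.zeros((32, 48), dtype=bool)
    frame_mask[0:16, 16:32] = True
    print(qp_offset_map(frame_mask))
```

In such a scheme the resulting offset map would be handed to the encoder's region-of-interest mechanism, so that the talking subject is coded at higher quality while the background absorbs most of the compression loss.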