The ability to interpret the semantics of objects and actions, their individual geometric attributes, and their spatial and temporal relationships within the environment is essential for an intelligent visual system and extremely valuable in numerous applications. In visual recognition, categorizing generic objects is a highly challenging problem. Single objects vary in appearance and shape under various photometric (e.g. illumination) and geometric (e.g. scale, viewpoint, occlusion) transformations. Largely because of this difficulty, most current research in object categorization has focused on modeling object classes in single (or nearly single) views. But our world is fundamentally 3D, and it is crucial that we design models and algorithms that can handle such appearance and pose variability.

Ing. Silvio Savarese: Visual recognition in the three dimensional world

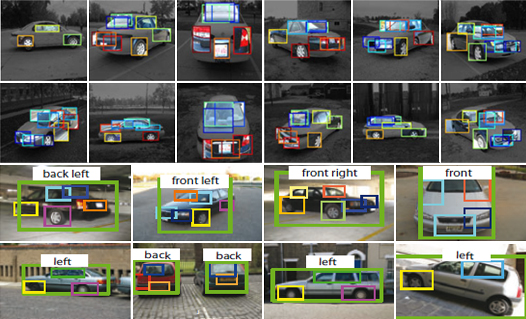

In this talk I will introduce a novel framework for learning and recognizing 3D object categories and their poses. Our approach is to capture a compact model of an object category by linking together diagnostic parts of the objects from different viewpoints. The resulting model summarizes both the appearance and geometry information of the object class. Unlike earlier attempts at 3D object categorization, our framework requires minimal supervision and can synthesize unseen views of an object category. Our categorization results show superior performance to state-of-the-art algorithms on the largest dataset to date. I will conclude the talk with final remarks on the relevance of the proposed research for a number of applications in mobile vision.