Federico Bartoli received his master’s degree in computer science from the University of Florence, Italy. He is currently a PhD candidate at the Media Integration and Communication Center. His research interests include pattern recognition and computer vision, specifically pedestrian detection and person re-identification. Lately, he has been working with state-of-the-art person re-identification descriptors to augment the museum visitor experience through personalized multimedia content delivery, and on real-time methods that speed up the detection process in surveillance and critical scenarios.

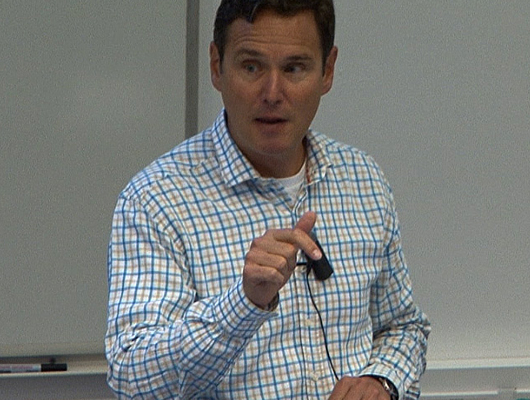

Action! Improved Action Recognition and Localization in Video

In this talk, Prof. Stan Sclaroff of the Department of Computer Science at Boston University will describe work related to action recognition and localization in video.

Abstract: Web images and video present a rich, diverse – and challenging – resource for learning and testing models of human actions.

In the first part of this talk, he will describe an unsupervised method for learning human action models. The approach is unsupervised in the sense that it requires no human intervention other than the action keywords used to form text queries to Web image and video search engines; thus, the vocabulary of actions can easily be extended by simply making additional search engine queries.

In the second part of this talk, Prof. Sclaroff will describe a Multiple Instance Learning framework for exploiting properties of the scene, objects, and humans in video to gain improved action classification. In the third part of this talk, he will describe a new representation for action recognition and localization, Hierarchical Space-Time Segments, which is helpful in both recognition and localization of actions in video. An unsupervised method is proposed that generates this representation from video: it extracts both static and non-static relevant space-time segments, and preserves their hierarchical and temporal relationships.

This work was conducted in collaboration with Shugao Ma and Jianming Zhang (Boston U), and Nazli Ikizler Cinbis (Hacettepe University).

The work also appears in the following papers:

- “Web-based Classifiers for Human Action Recognition”, IEEE Trans. on Multimedia

- “Object, Scene and Actions: Combining Multiple Features for Human Action Recognition”, ECCV 2010

- “Action Recognition and Localization by Hierarchical Space-Time Segments”, ICCV 2013

Alberto Del Bimbo keynote speech at CBMI 2013

The 11th International Content Based Multimedia Indexing Workshop (CBMI 2013) brings together the various communities involved in all aspects of content-based multimedia indexing, retrieval, browsing and presentation. It is the second time that CBMI has been held in Eastern Europe; this edition takes place in the historic town of Veszprém between 17 and 19 June 2013.

Alberto Del Bimbo, MICC Director, was one of the invited keynote speakers. His keynote was about “Social Media Annotation”.

Abstract: the large success of online social platforms for creation, sharing and tagging of user-generated media has led to a strong interest by the multimedia and computer vision communities in research on methods and techniques for annotating and searching social media. Visual content similarity, geo-tags and tag co-occurrence, together with social connections and comments, can be exploited to perform tag suggestion as well as content classification and clustering, and to enable more effective semantic indexing and retrieval of visual data. However, there is a need to countervail the relatively low quality of these metadata – user-produced tags and annotations are known to be ambiguous, imprecise and/or incomplete, overly personalized and limited – and at the same time to take into account the ‘web-scale’ quantity of media and the fact that social network users continuously add new images and create new terms. We will review the state-of-the-art approaches to automatic annotation and tag refinement for social images and discuss extensions to tag suggestion and localization in web video sequences.

Objects and Poses

In this talk, Prof. Stan Sclaroff of the Department of Computer Science at Boston University will describe work related to object recognition and pose inference.

In the first part of the talk, he will describe a machine learning formulation for object detection that is based on set representations of the contextual elements. Directly training classification models on sets of unordered items, where each set can have varying cardinality, can be difficult; to overcome this problem, he proposes SetBoost, a discriminative learning algorithm for building set classifiers.

In the second part of the talk, he will describe efficient optimal methods for inference of the pose of objects in images, given a loopy graph model for the object structure and appearance. In one approach, linearly augmented tree models are proposed that enable efficient scale and rotation invariant matching.

In another approach, articulated pose estimation with loopy graph models is made efficient via a branch-and-bound strategy for finding the globally optimal human body pose.

This work was conducted in collaboration with Hao Jiang (Boston College), Tai-Peng Tian (GE Research Labs), Kun He (Boston U) and Ramazan Gokberk Cinbis (INRIA Grenoble), and appears in the following papers: “Fast Globally Optimal 2D Human Detection with Loopy Graph Models” in CVPR 2010, “Scale Resilient, Rotation Invariant Articulated Object Matching” in CVPR 2012, and “Contextual Object Detection using Set-based Classification” in ECCV 2012.

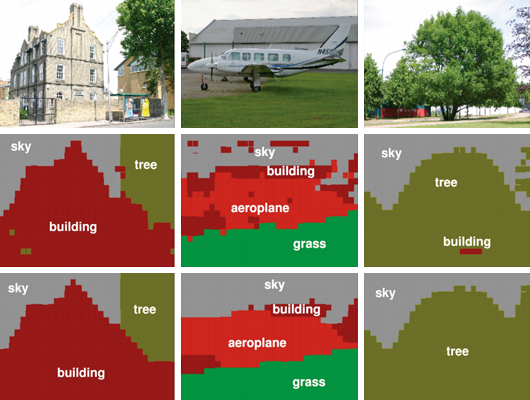

Segmentation Driven Object Detection with Fisher Vectors

Jakob Verbeek of the LEAR team at INRIA, Grenoble, France, will present an object detection system based on the powerful Fisher vector (FV) image representation in combination with spatial pyramids computed over SIFT descriptors.

To alleviate the memory requirements of the high dimensional FV representation, we exploit a recent segmentation-based method to generate class-independent object detection hypotheses, in combination with data compression techniques. Our main contribution, however, is a method to produce tentative object segmentation masks to suppress background clutter. Re-weighting the local image features based on these masks is shown to improve object detection significantly. To further improve the detector performance, we additionally compute these representations over local color descriptors, and include a contextual feature in the form of a full-image FV descriptor.

In the experimental evaluation based on the VOC 2007 and 2010 datasets, we observe excellent detection performance for our method. It performs better than or comparably to many recent state-of-the-art detectors, including ones that use more sophisticated forms of inter-class contextual cues.

Additionally, including a basic form of inter-category context leads, to the best of our knowledge, to the best detection results reported to date on these datasets.

This work will be published in a forthcoming ICCV 2013 paper.

UDOO chooses MICC as beta tester

The founders of one of the latest successful innovative projects funded on the Kickstarter platform have chosen our center, MICC, as one of the beta testers for the UDOO board, which will begin to be distributed in September.

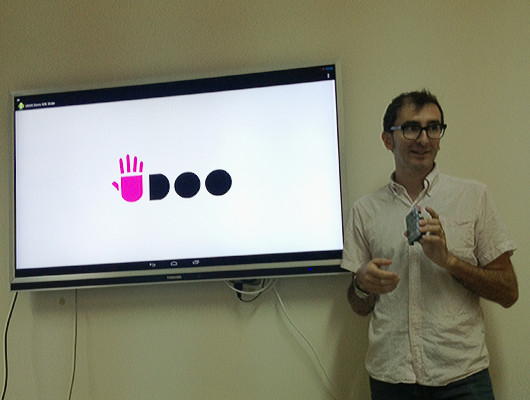

Maurizio Caporali presenting UDOO at MICC

Maurizio Caporali, CEO of Aidilab, showed us the potential of UDOO at our center on Friday, July 19, and delivered a beta version of the board to us.

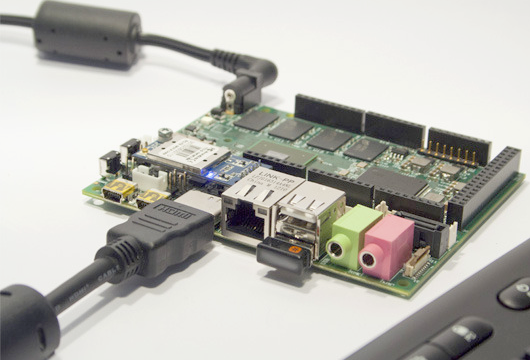

UDOO is a multi development platform solution for Android, Linux, Arduino™ and Google ADK 2012. The board is designed to provide a powerful prototyping board for software development and design in order to explore the new frontiers of the Internet of Things. UDOO allows you to switch simply between Linux and Android by replacing the Micro SD card and rebooting the system.

The UDOO development board

UDOO is a joint effort of SECO USA Inc. (www.seco.com) and Aidilab (www.aidilab.com), in collaboration with a multidisciplinary team of researchers with expertise in interaction design and embedded electronics. We interviewed UDOO to learn more about their project.

Searching with Solr

Eng. Giuseppe Becchi, Wikido events portal founder, will hold a technical seminar about Solr, entitled “Searching with Solr”, on Monday, July 8, 2013 at the Media Integration and Communication Center.

Abstract:

- What is Solr and how it came about.

- Why Solr? Reliable, Fast, Supported, Open Source, Tunable Scoring, Lucene based.

- Installing & Bringing Up Solr in 10 minutes.

Solr is the popular, blazing fast open source enterprise search platform from the Apache Lucene project. Its major features include powerful full-text search, hit highlighting, faceted search, near real-time indexing, dynamic clustering, database integration, rich document (e.g., Word, PDF) handling, and geospatial search.

During the meeting, we’ll see how to:

- install and deploy Solr (also under Tomcat)

- configure the two xml config files: schema.xml and solrconfig.xml

- connect Solr to different data sources: XML, MySQL, PDF (using Apache Tika)

- configure a tokenizer for different languages

- configure result scoring and perform a faceted and a geospatial search

- configure and perform a “morelikethis” search
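As a rough illustration of the faceted and geospatial searches listed above, the following sketch only builds the query URL for Solr's select handler; it does not contact a server. The host, the `collection1` core name, and the field names `category` and `location` are assumptions made for the example and are not taken from the seminar material.

```python
from urllib.parse import urlencode

# Assumed endpoint: a local Solr instance with a core named "collection1".
SOLR_SELECT = "http://localhost:8983/solr/collection1/select"


def build_query(text, facet_field, lat, lon, radius_km):
    """Build a select URL combining full-text, faceted and geospatial search."""
    params = [
        ("q", text),                   # full-text query
        ("wt", "json"),                # ask for a JSON response
        ("rows", 10),                  # number of hits to return
        ("facet", "true"),             # enable faceting
        ("facet.field", facet_field),  # count hits per value of this field
        ("fq", "{!geofilt}"),          # filter by distance from a point
        ("sfield", "location"),        # hypothetical spatial field name
        ("pt", f"{lat},{lon}"),        # center point as "lat,lon"
        ("d", radius_km),              # radius in kilometers
    ]
    return SOLR_SELECT + "?" + urlencode(params)


# Hypothetical search: events matching "concert" within 5 km of a point.
url = build_query("concert", "category", 43.77, 11.25, 5)
print(url)
```

The resulting URL can be pasted into a browser or passed to any HTTP client once a Solr instance with a matching schema is running.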

Material: participants are encouraged to bring a PC with Java 1.6 or later and Tomcat installed. The tutorial file that will be used during the seminar (solr-4.3.1.tgz) can be downloaded from http://it.apache.contactlab.it/lucene/solr/4.3.1/

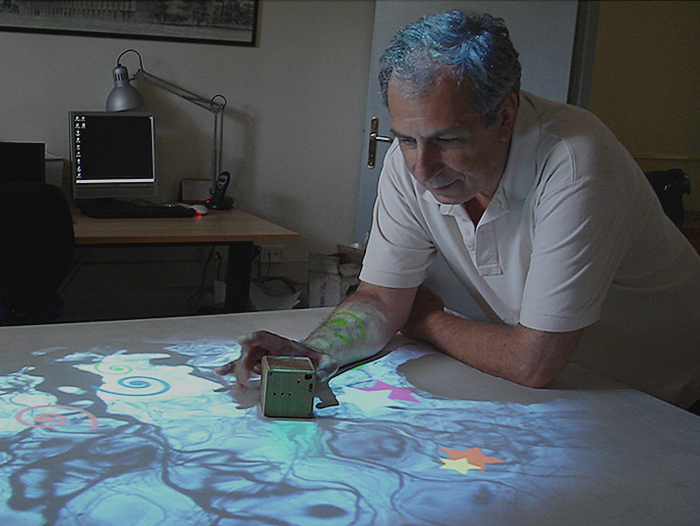

Introduction to Oblong Industries’ Greenhouse SDK

Eng. Alessandro Valli, Software Engineer at Oblong Industries, will hold a technical seminar about the Greenhouse SDK on Friday, June 21, 2013 at the Media Integration and Communication Center.

Greenhouse is a toolkit for creating interactive spaces: multi-screen, multi-user, multi-device, multi-machine interfaces leveraging gestural and spatial interaction. Working knowledge of C++ and OpenGL is recommended but not required. Greenhouse is available now for Mac OS X, with Linux support coming soon, and is free for non-commercial use.

Introduction to Hadoop

Eng. Niccolò Becchi, Wikido events portal founder, will hold a technical seminar about Apache Hadoop on Monday, June 17, 2013 at the Media Integration and Communication Center.

Abstract:

- what is it and how it came about;

- who uses it and what for;

- the map-reduce: an application in many small pieces;

- and, especially, when it may make sense to use it even if you do not work at Facebook.

Hadoop is a tool that allows you to run scalable applications on clusters consisting of tens, hundreds or even thousands of servers. It is currently used by Facebook, Yahoo, LastFm and many other organizations that need to work on gigabytes or even petabytes of data.

At the core of the framework is the Map-Reduce paradigm. Developed internally at Google on top of its distributed filesystem, it was created to meet the company’s need for parallel processing of large amounts of data. Hadoop is an open source implementation of this model, maintained by the Apache Software Foundation, which anyone can use to process data on their own servers or, possibly, on the Amazon cloud (at the cost of some credit card charges!).

During the meeting, you will take the first steps with the Map-Reduce paradigm. Many (but not all) algorithms can be rewritten in this style of programming. We will also look at some tools that increase productivity in Map-Reduce application development.
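To give a feel for the paradigm, here is a minimal single-process sketch of a word count written as separate map, shuffle and reduce steps. It is plain Python and does not use the Hadoop API; the function names are chosen for this illustration only. In a real cluster the framework would run the map and reduce steps in parallel on many machines and perform the grouping between them.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key between the two phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle and reduce in sequence over a list of documents."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = word_count(["big data big clusters", "big servers"])
print(counts)
```

Each map call sees only one record and each reduce call sees only one key, which is what lets the work be split into many small independent pieces.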

Material: participants are encouraged to bring a PC with a Java development environment installed (JDK >= 1.6) and the Hadoop package, downloadable from http://hadoop.apache.org/releases.html (#Download): choose 1.1.X, the current stable version (1.1 release).

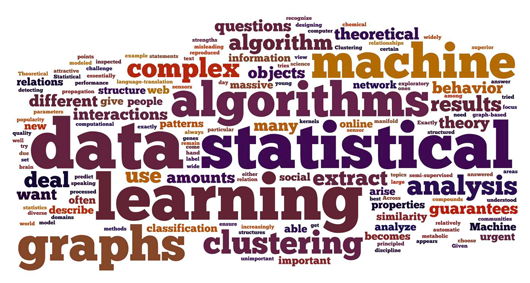

Social Media Annotation

The large success of online social platforms for creation, sharing and tagging of user-generated media has led to a strong interest by the multimedia and computer vision communities in research on methods and techniques for annotating and searching social media.

Visual content similarity, geo-tags and tag co-occurrence, together with social connections and comments, can be exploited to perform tag suggestion as well as content classification and clustering, and to enable more effective semantic indexing and retrieval of visual data.

However, there is a need to countervail the relatively low quality of these metadata – user-produced tags and annotations are known to be ambiguous, imprecise and/or incomplete, overly personalized and limited – and at the same time to take into account the ‘web-scale’ quantity of media and the fact that social network users continuously add new images and create new terms.

We will review the state-of-the-art approaches to automatic annotation and tag refinement for social images and discuss extensions to tag suggestion and localization in web video sequences.

Lecturer: Marco Bertini