Abstract

Posterity logging in support of forensic analysis of video surveillance footage can be thought of as saving a compact, semantically relevant snapshot of a scene that might be relevant for future, postmortem analysis of an event or series of events. Faces, particularly high-resolution, high-quality imagery of faces, can be a powerful semantic cue for forensic analysis of video footage, and if properly associated with observation of individual persons in the video, can be used to associate identities with people in footage where their face is not visible. In this paper we present a real-time solution for posterity logging of face images in video streams. Our system is capable of detecting and tracking multiple targets in real time, grabbing face images and evaluating their quality in order to store only the best for each detected target. We propose two quality measures for face imagery, one based on symmetry and the other on face pose. Extensive qualitative and quantitative evaluation of the performance of our system is provided on many hours of realistic surveillance footage captured in different environments. Results demonstrate that our system manages to balance the need to obtain face images of all people in a scene, while simultaneously minimizing false positives and identity mismatches.

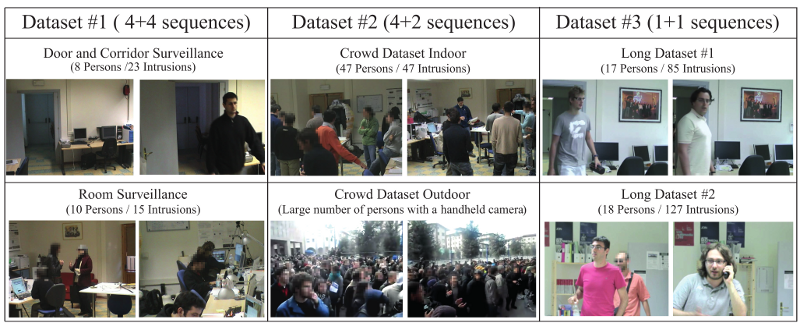

Dataset and Labeled Annotations:

The face logging system was tested on 3 datasets (16 sequences for a total of about 11 hours):

- The sequences in the first dataset were recorded from a typical door or corridor surveillance vantage point. In these cases, it is quite easy to acquire good images of target faces, unless they are intentionally concealed or the person is only visible from the back.

- The sequences in the second dataset were taken in very crowded environments, such as occur in real-life video surveillance scenarios, both indoor and outdoor, where occlusions, cast shadows, and frequent changes of head pose are very common.

- The third dataset is composed of two sequences, each of about five hours, taken in an indoor environment and designed to test the capability of the system to work over very long periods of time. The second and third datasets are specifically designed to provide a quantitative evaluation of our approach and to demonstrate that it can track and log for an entire workday, about ten hours in total.

Datasets 1 and 2 are encoded with Microsoft MPEG-4 v3. Dataset 3 is encoded with the Xvid codec.

Note that we cannot provide the Crowd Outdoor sequences of dataset #2.

Provided Software:

- We provide a MATLAB script that opens the video and reads the labeled annotations. We strongly suggest installing matlab-video4linux to read video files from MATLAB on any platform. Our script depends on this tool to view the video, and the tool in turn depends on OpenCV.

- We provide a compiled version of the matlab-video4linux MEX interface for MATLAB on Linux x86_64.

- We provide a script to start MATLAB and use matlab-video4linux.

- The third dataset contains very long sequences (up to 5 hours). For each of them we provide a change-detection file in CSV (comma-separated values) and SSV (space-separated values) format, which can easily be read to skip frames where nothing happens. We have also encoded a new video that skips those frames, named “LongMICCX_motion.avi”. Note that the annotations for the long sequences are relative to the “LongMICCX_motion.avi” video file. The motion-detection files have this format:

{frame number , [0/1] }

1 , 0

2 , 0

3 , 0

4 , 1

and so on.

A value of 0 means no motion was detected in that frame; 1 means motion was detected.
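As an illustration of how such a motion-detection file might be read, here is a minimal Python sketch. It is not part of the provided software. The function names `read_motion_flags` and `motion_frames` are hypothetical, and the sample data is made up to match the format shown above. The parser splits on commas or whitespace, so it should accept both the CSV and SSV variants.

```python
import io
import re


def read_motion_flags(lines):
    """Parse 'frame , flag' records into a list of (frame_number, flag) pairs.

    Hypothetical helper: accepts comma- or space-separated fields.
    """
    records = []
    for line in lines:
        # Split on commas and/or whitespace so CSV and SSV both work.
        fields = [f for f in re.split(r"[,\s]+", line.strip()) if f]
        if len(fields) != 2:
            continue  # skip blank or malformed lines
        try:
            frame, flag = int(fields[0]), int(fields[1])
        except ValueError:
            continue  # skip non-numeric lines, e.g. a header row
        records.append((frame, flag))
    return records


def motion_frames(records):
    """Return the frame numbers whose flag is 1 (motion detected)."""
    return [frame for frame, flag in records if flag == 1]


# Made-up sample in the format described above.
sample = io.StringIO("1 , 0\n2 , 0\n3 , 0\n4 , 1\n5 , 1\n6 , 0\n")
records = read_motion_flags(sample)
kept = motion_frames(records)
print(kept)  # [4, 5]
```

If the “LongMICCX_motion.avi” file contains exactly the frames flagged 1, in their original order (an assumption that should be checked against the data), then the k-th entry of `kept` gives the original frame number of the k-th frame in the motion-only video.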

Download the dataset, annotations, and software (about 3 GB).

Results and Supplementary Material:

Video results of the face logging approach on the fourth sequence of the second dataset:

Face logging results on sequence #4 of the second dataset from the MICC face logging dataset.

Video comparison with Ross’s Tracker (Section II-B of the paper):

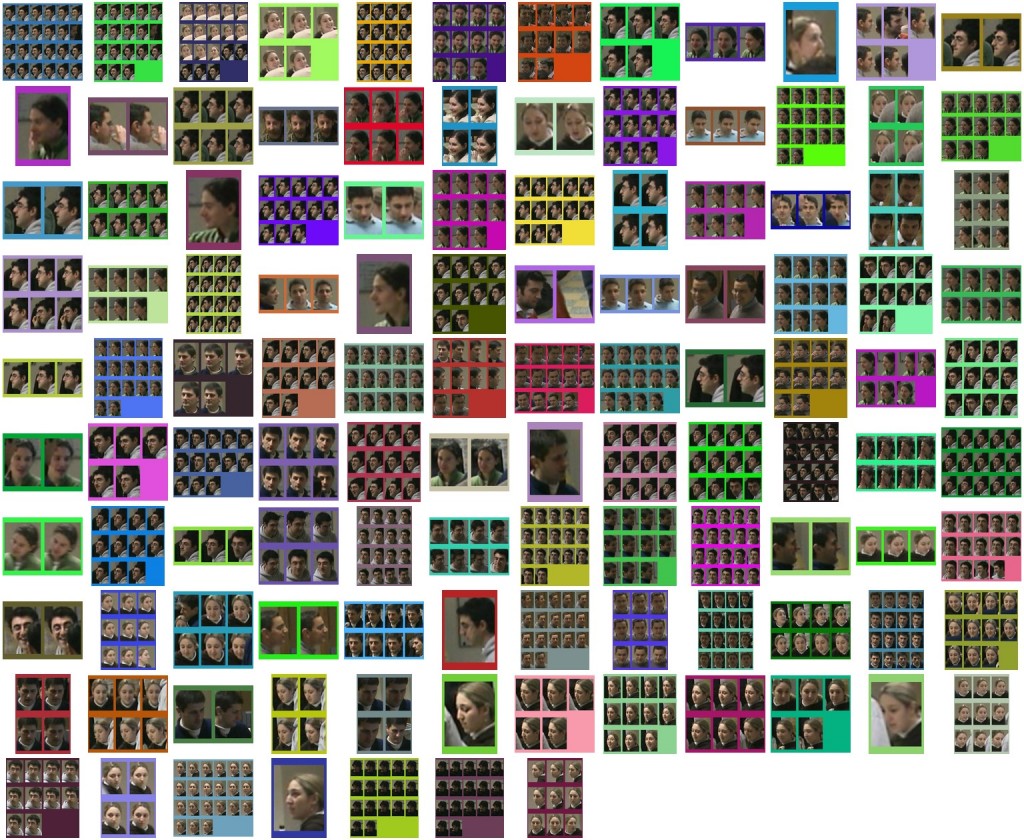

Still images of sequence #4 of Dataset #2:

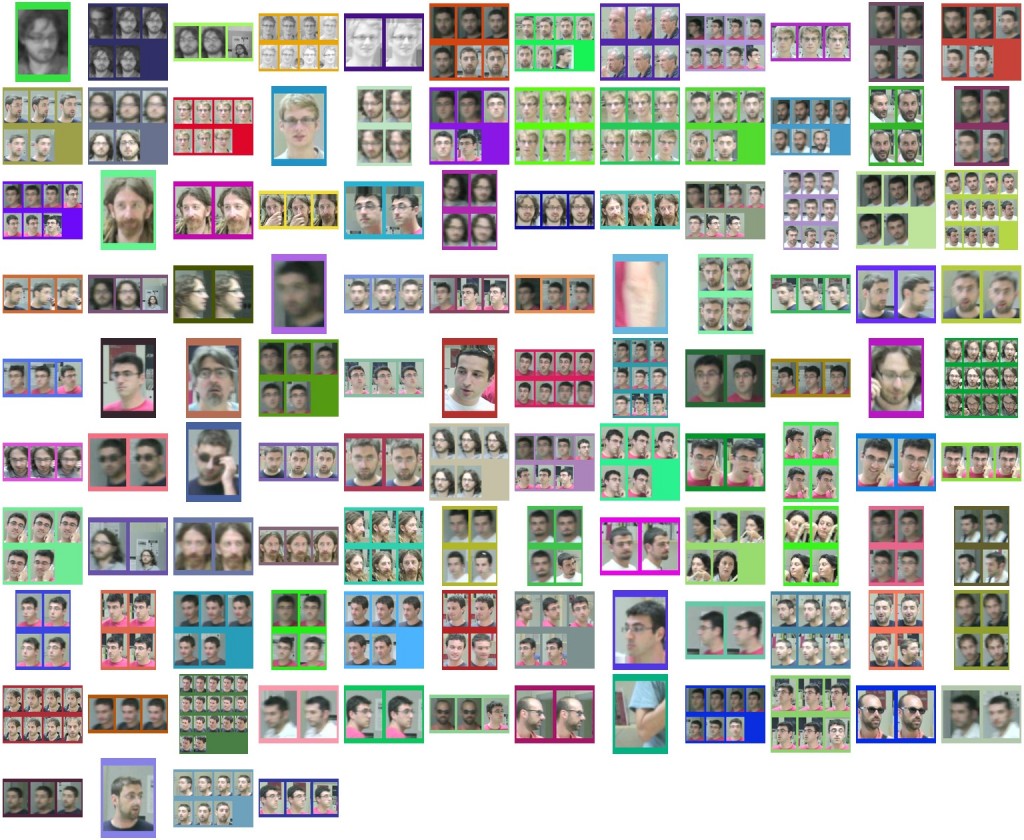

Still images of Long Sequences of Dataset #3:

References:

[1] David Ross, Jongwoo Lim, Ruei-Sung Lin and Ming-Hsuan Yang, “Incremental Learning for Robust Visual Tracking”, International Journal of Computer Vision, Special Issue: Learning for Vision, 2007. Code available at http://www.cs.toronto.edu/~dross/ivt/

[2] Alberto Del Bimbo and Fabrizio Dini, “Particle filter–based visual tracking with a first order dynamic model and uncertainty adaptation”, Computer Vision and Image Understanding (CVIU), June 2011. The dataset for tracking is available at http://www.micc.unifi.it/dini/research/particle-filter-based-visual-tracking/

[3] Alberto Del Bimbo, Fabrizio Dini and Giuseppe Lisanti, “A real time solution for face logging”, In Proc. of International Conference on Imaging for Crime Detection and Prevention (ICDP), 2009.

The dataset is maintained by Iacopo Masi and Giuseppe Lisanti, email: mas…@dsi.unifi.it and lisa…@dsi.unifi.it.